The enterprise network landscape is undergoing a seismic shift, driven not by gradual data growth but by an explosion of demand that legacy infrastructure can no longer support. For Chief Information Officers and Network Architects, the question is no longer if they should upgrade to 400 Gigabit Ethernet (400GbE) backbones, but how quickly they can execute that migration to maintain competitive parity.

- The Data Tsunami: Why 100G is No Longer Enough

- Technical Architecture of 400G Networks

- Financial Analysis: The ROI of 400G

- Strategic Implementation Guidelines

- Phase 1: Core and Spine Upgrades

- Phase 2: High-Performance Compute (HPC) Clusters

- Phase 3: General Enterprise Access

- Brownfield vs. Greenfield Deployment

- Overcoming Implementation Challenges

- Future Proofing: The Road to 800G and 1.6T

- Security Considerations for High-Speed Networks

- The transition to 400G network backbones

- Comprehensive 400G Market Trends and Analysis

- Vendor Landscape and Silicon Diversity

- The Role of Optical Transceivers in Cost

- Cloud-Native Networking and SONiC

- Operationalizing 400G: Monitoring and Telemetry

- The Global Context: 400G in Emerging Markets

- Final Thoughts: The Risk of Obsolescence

As we move through late 2025, the proliferation of Generative AI, high-frequency trading platforms, and hyperscale cloud services has turned network bandwidth into a primary business constraint. This guide provides a detailed analysis of the return on investment (ROI), technical necessities, and strategic advantages of upgrading your network backbone to 400G. We will explore how this transition reduces Total Cost of Ownership (TCO), optimizes power consumption, and future-proofs your infrastructure against the looming demands of 800G and 1.6T standards.

The Data Tsunami: Why 100G is No Longer Enough

For the past decade, 100G uplinks were the gold standard for high-performance data center interconnects (DCI) and enterprise backbones. However, the architecture that supported the digital workloads of 2020 is rapidly crumbling under the weight of 2025’s applications. The bottleneck has shifted from storage and compute to the network fabric itself.

The Impact of Generative AI and ML Workloads

The most significant driver for 400G adoption is the rise of Artificial Intelligence and Machine Learning (AI/ML). Unlike traditional web traffic, which is predominantly North-South (client to server), AI workloads generate massive amounts of East-West traffic (server to server). Training Large Language Models (LLMs) requires thousands of GPUs to synchronize parameters in real-time.

Recent industry reports indicate that AI clusters are increasingly network-bound. When a network cannot deliver data fast enough to the Graphics Processing Units (GPUs), those expensive processors sit idle, wasting capital investment. 400G Ethernet provides the necessary “fat pipe” to ensure high utilization rates for AI infrastructure. A legacy 100G network simply cannot handle the burstiness and sustained throughput required for modern model training without inducing severe latency.
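To make the idle-GPU argument concrete, here is a minimal back-of-the-envelope sketch. The 10 GB payload per synchronization step is a hypothetical figure chosen for illustration, and the function ignores latency, protocol overhead, and congestion, so real numbers will differ.

```python
def transfer_seconds(payload_gigabytes: float, link_gbps: float) -> float:
    """Idealized time to move a payload over one link (no overhead, no congestion)."""
    payload_gigabits = payload_gigabytes * 8  # bytes -> bits
    return payload_gigabits / link_gbps


# Hypothetical 10 GB parameter exchange per synchronization step.
for rate_gbps in (100, 400):
    seconds = transfer_seconds(10, rate_gbps)
    print(f"{rate_gbps}G link: {seconds:.2f} s per step")
```

Under these assumptions the 400G link spends a quarter of the time on each exchange, which is time the GPUs would otherwise spend waiting.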

The Convergence of IoT and 5G Backhaul

Beyond AI, the enterprise is dealing with an influx of data from the Internet of Things (IoT) and the requirements of 5G backhaul. As edge computing moves data processing closer to the source, the backhaul connections returning aggregated data to the core data center must handle higher capacities. 400G serves as the critical aggregation layer, collecting traffic from multiple 100G or 50G access points and funneling it efficiently into the core.

Technical Architecture of 400G Networks

Understanding the business value requires a brief look at the underlying technology. The leap from 100G to 400G is not just a speed increase; it is a fundamental change in signal modulation and efficiency.

PAM4 Modulation: The Game Changer

Previous generations of Ethernet, such as 10G and 25G, used Non-Return-to-Zero (NRZ) modulation, which transmits one bit of data per signal symbol (either a 0 or a 1). Achieving 400G with NRZ would require an impractical number of lanes or impractically high clock speeds.

400G adopts four-level Pulse Amplitude Modulation (PAM4). PAM4 uses four distinct voltage levels to encode two bits of data per symbol (00, 01, 10, 11), which doubles the data rate at the same symbol (baud) rate compared to NRZ. The trade-off is that the levels sit closer together, so PAM4 links are more sensitive to noise and rely on Forward Error Correction (FEC). For enterprise buyers, this technology means you are getting more data throughput per fiber strand, which directly impacts your cabling costs and fiber plant utilization.
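The difference between the two schemes can be sketched in a few lines of Python. This is an illustrative toy encoder, not a model of a real transceiver; the Gray-coded level mapping and the symbolic amplitudes (-3, -1, +1, +3) are assumptions made for the example.

```python
# Assumed Gray-coded PAM4 mapping: neighbouring levels differ by a single bit.
PAM4_LEVELS = {(0, 0): -3, (0, 1): -1, (1, 1): 1, (1, 0): 3}


def nrz_encode(bits):
    """NRZ: one symbol per bit (0 -> -1, 1 -> +1)."""
    return [1 if bit else -1 for bit in bits]


def pam4_encode(bits):
    """PAM4: one symbol per pair of bits."""
    if len(bits) % 2:
        raise ValueError("PAM4 needs an even number of bits")
    return [PAM4_LEVELS[(bits[i], bits[i + 1])] for i in range(0, len(bits), 2)]


bits = [0, 0, 0, 1, 1, 1, 1, 0]
print("NRZ symbols: ", nrz_encode(bits))   # 8 symbols for 8 bits
print("PAM4 symbols:", pam4_encode(bits))  # 4 symbols for the same 8 bits
```

The same eight bits need half as many symbols with PAM4, which is where the doubling at a fixed symbol rate comes from.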

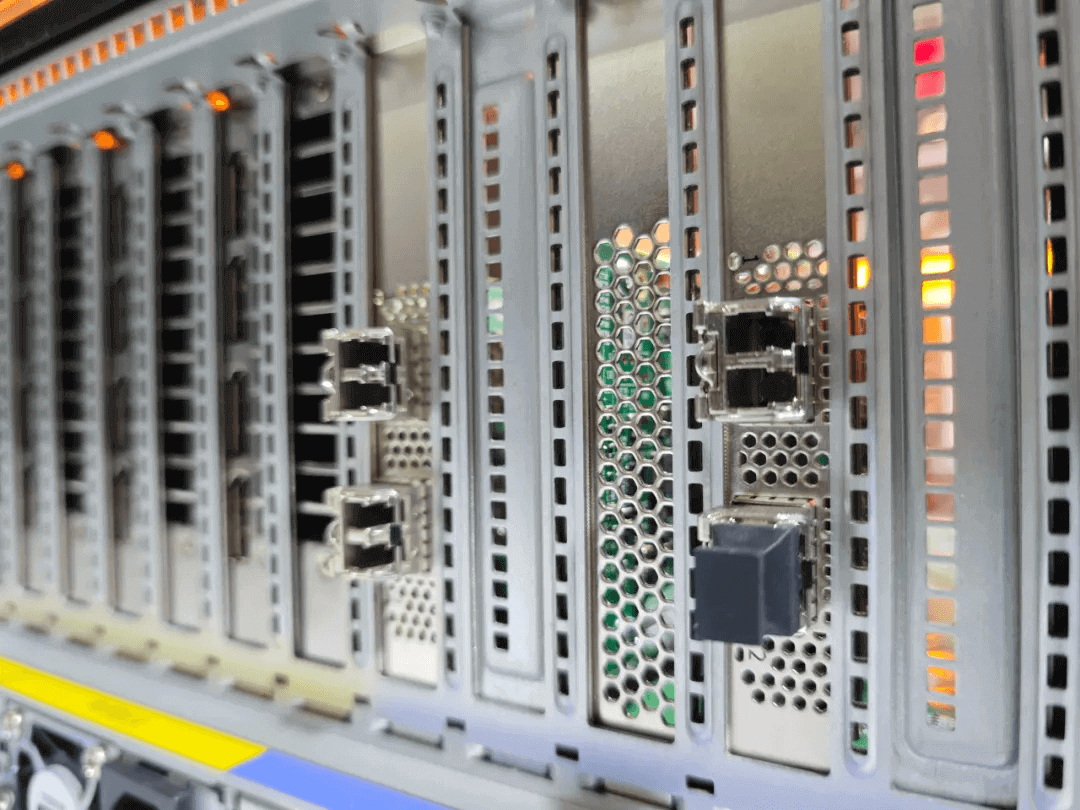

Form Factors: QSFP-DD vs OSFP

When selecting hardware for 400G upgrades, network architects generally choose between two primary transceiver form factors.

- QSFP-DD (Quad Small Form-factor Pluggable Double Density): This is the most popular choice for enterprise data centers because of its backward compatibility. A QSFP-DD port can accept legacy QSFP28 (100G) and QSFP+ (40G) modules. This allows for a phased migration where you can upgrade switches today but continue using existing optics until budget allows for a full upgrade.

- OSFP (Octal Small Form-factor Pluggable): Slightly larger than QSFP-DD, the OSFP form factor is designed with integrated thermal management. While not backward compatible with QSFP28 without an adapter, OSFP is often preferred for hyperscale environments looking toward 800G and beyond, as its thermal design handles the higher power requirements of future generations more effectively.

Financial Analysis: The ROI of 400G

The initial Capital Expenditure (CAPEX) for 400G switches and optics is higher than 100G equipment. However, the business case for 400G is built on Operational Expenditure (OPEX) savings and density efficiency.

Lower Cost Per Bit

When you analyze the cost per gigabit of throughput, 400G is significantly cheaper than aggregated 100G links. Deploying four separate 100G connections requires four switch ports and four transceivers at each end, plus four fiber pairs. A single 400G connection consolidates this into one port and one transceiver at each end. Over a large data center fabric, this consolidation reduces the number of managed endpoints by a factor of four, simplifying network management and reducing the “cost per bit” of data transmission.
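The cost-per-bit comparison can be sketched as a small calculation. All prices below are hypothetical placeholders rather than market data; substitute quotes from your own vendors before drawing conclusions.

```python
def cost_per_gbps(port_cost: float, optic_cost: float, links: int, gbps_per_link: int) -> float:
    """Hardware cost per Gb/s for a bundle of point-to-point links.

    Each link is assumed to need one switch port and one optic at both ends.
    """
    total_cost = links * 2 * (port_cost + optic_cost)
    return total_cost / (links * gbps_per_link)


# Hypothetical prices (arbitrary currency units), for illustration only.
four_by_100g = cost_per_gbps(port_cost=300, optic_cost=200, links=4, gbps_per_link=100)
one_by_400g = cost_per_gbps(port_cost=800, optic_cost=600, links=1, gbps_per_link=400)
print(f"4 x 100G: {four_by_100g:.2f} per Gb/s")
print(f"1 x 400G: {one_by_400g:.2f} per Gb/s")
```

With these placeholder figures the 400G link costs more in absolute terms per port and optic but less per gigabit delivered. The sketch deliberately leaves out fiber, power, and management overhead.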

Energy Efficiency and Sustainability

Power consumption is a top-tier concern for modern data centers, both for cost control and Environmental, Social, and Governance (ESG) compliance. While a single 400G module consumes more power than a single 100G module, it consumes far less power than four 100G modules delivering equivalent bandwidth.

Industry data suggests that upgrading to 400G can improve power efficiency by over 30% per transmitted bit. For a hyperscale or large enterprise facility with thousands of ports, this translates to many megawatt-hours of energy saved annually, resulting in substantial reductions in electricity bills and cooling requirements.
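A rough way to sanity-check the efficiency claim for your own environment is shown below. The module wattages (4.5 W for a 100G optic, 12 W for a 400G optic) are assumed example values, not vendor specifications, and the calculation covers optics only, not switch ASICs or cooling.

```python
HOURS_PER_YEAR = 24 * 365


def watts_per_gbps(module_watts: float, gbps: int) -> float:
    """Power drawn per Gb/s of capacity for one optical module."""
    return module_watts / gbps


def annual_kwh_saved(links_400g: int, watts_100g: float, watts_400g: float) -> float:
    """Energy saved per year when each 400G module replaces four 100G modules."""
    saved_watts = links_400g * (4 * watts_100g - watts_400g)
    return saved_watts * HOURS_PER_YEAR / 1000


# Assumed example wattages, for illustration only.
improvement = 1 - watts_per_gbps(12, 400) / watts_per_gbps(4.5, 100)
print(f"Power per bit improvement: {improvement:.0%}")
print(f"Saved per year for 1000 modules: {annual_kwh_saved(1000, 4.5, 12):,.0f} kWh")
```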

Maximizing Rack Density

Real estate in the data center is expensive. 400G switches allow for massive increases in rack density. A standard 1RU (Rack Unit) switch can now support 32 ports of 400G, providing 12.8 Tbps of throughput in a single pizza-box form factor. Achieving this same throughput with 100G equipment would require multiple chassis, consuming far more rack space and requiring complex leaf-spine interconnect cabling. High-density 400G switches enable “flatter” network topologies, reducing the number of tiers in the network design and lowering latency.
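The density arithmetic is easy to reproduce. The sketch below counts only front-panel capacity; it assumes 32-port 1RU switches in both cases and does not account for the extra ports and tiers needed to interconnect several smaller switches into one fabric.

```python
def switches_needed(target_gbps: int, ports_per_switch: int, gbps_per_port: int) -> int:
    """Number of fixed-port switches needed to reach a target front-panel capacity."""
    per_switch_gbps = ports_per_switch * gbps_per_port
    return -(-target_gbps // per_switch_gbps)  # integer ceiling division


TARGET_GBPS = 32 * 400  # 12,800 Gb/s, i.e. 12.8 Tbps
print("32 x 400G switches:", switches_needed(TARGET_GBPS, 32, 400))
print("32 x 100G switches:", switches_needed(TARGET_GBPS, 32, 100))
```

In practice the 100G case is worse than the raw count suggests, because some ports on each switch must be spent on links between the switches themselves.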

Strategic Implementation Guidelines

Migrating to a 400G backbone is a complex operation that requires careful planning. We recommend a phased approach to mitigate risk and manage budget outlays effectively.

Phase 1: Core and Spine Upgrades

The most logical starting point is the network core or the spine layer of a leaf-spine topology. These are the aggregation points where bandwidth congestion is most acute. By upgrading the spine switches to 400G, you alleviate bottlenecks for all downstream traffic. During this phase, you can use breakout cables to connect new 400G spine ports to existing 100G leaf switches (e.g., one 400G port breaking out to four 100G links).
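A breakout plan for this phase can be drafted programmatically before any cabling is touched. The sketch below is a planning aid only; the port names ("Ethernet1"), leaf names ("leaf-01"), and the "port/lane" label format are hypothetical and do not correspond to any particular vendor's interface naming.

```python
def breakout_plan(spine_ports, leaf_switches, lanes_per_port=4):
    """Assign each 100G leaf uplink to one breakout lane of a 400G spine port."""
    capacity = len(spine_ports) * lanes_per_port
    if len(leaf_switches) > capacity:
        raise ValueError(f"{len(leaf_switches)} leaves exceed {capacity} breakout lanes")
    plan = {}
    for index, leaf in enumerate(leaf_switches):
        port = spine_ports[index // lanes_per_port]
        lane = index % lanes_per_port + 1
        plan[leaf] = f"{port}/{lane}"
    return plan


# Hypothetical inventory: two 400G spine ports serving five 100G leaves.
leaves = [f"leaf-{n:02d}" for n in range(1, 6)]
for leaf, lane in breakout_plan(["Ethernet1", "Ethernet2"], leaves).items():
    print(f"{leaf} -> {lane}")
```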

Phase 2: High-Performance Compute (HPC) Clusters

Once the core is upgraded, target specific server racks that host high-performance workloads, such as AI training clusters or storage arrays. These endpoints can saturate 100G links easily. Deploying 400G Top-of-Rack (ToR) switches in these specific zones ensures that your most critical applications have the headroom they need.

Phase 3: General Enterprise Access

The final phase involves upgrading general-purpose server access. For many standard enterprise applications, 100G or even 25G to the server remains sufficient. However, as 400G becomes the standard at the core, the economics of 100G optics for access layers become even more attractive, as the technology matures and scales down.

Brownfield vs. Greenfield Deployment

For Greenfield (new) deployments, starting with 400G is usually the most logical choice. It avoids the immediate technical debt of installing legacy 100G equipment that may need replacement within 24 months.

For Brownfield (existing) environments, the focus should be on interoperability. Ensure your chosen 400G switch vendor supports the specific breakout modes and FEC (Forward Error Correction) settings required to talk to your existing 100G NICs and switches.

Overcoming Implementation Challenges

Despite the benefits, the path to 400G is not without hurdles.

Thermal Management

400G optics run hotter than their predecessors. Network architects must verify that their existing racks and cooling solutions can handle the increased thermal density. This might require upgrading rack fans or adopting liquid cooling solutions for high-density AI clusters.

Cabling Infrastructure

400G often requires higher quality fiber to maintain signal integrity over distance. While OM4 multimode fiber works for short distances (up to 100m for SR8), longer runs will likely require Single Mode Fiber (OS2). Reviewing your current fiber plant is a critical prerequisite. Because PAM4 signaling has tighter noise margins than NRZ, dirty connectors or low-grade fiber will result in high bit-error rates and link instability.
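A fiber plant audit can start with a simple reach check like the sketch below. The SR8 and DR4 limits are the nominal figures used in this guide; the 2 km limit for FR4 is the commonly quoted nominal reach and is included here as an assumption. Real link budgets also depend on connector loss and fiber condition, so treat this as a first-pass filter only.

```python
# Nominal maximum reach in metres, keyed by (optic type, fiber type).
MAX_REACH_M = {
    ("SR8", "OM4"): 100,
    ("DR4", "OS2"): 500,
    ("FR4", "OS2"): 2000,  # assumed nominal reach
}


def supported_optics(fiber_type: str, run_length_m: float) -> list:
    """Return the optic types whose nominal reach covers a given fiber run."""
    return sorted(
        optic
        for (optic, fiber), reach in MAX_REACH_M.items()
        if fiber == fiber_type and run_length_m <= reach
    )


print(supported_optics("OM4", 80))   # short multimode run
print(supported_optics("OM4", 150))  # multimode run beyond SR8 reach
print(supported_optics("OS2", 800))  # longer single-mode run
```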

Supply Chain and Lead Times

While availability has improved since the chip shortages of previous years, demand for high-end networking silicon (like the Broadcom Tomahawk series or NVIDIA Spectrum) remains high due to the AI boom. Enterprise buyers should plan for lead times and work closely with Value Added Resellers (VARs) to secure inventory for planned upgrades.

Future Proofing: The Road to 800G and 1.6T

Investing in 400G today is also about preparing for tomorrow. The networking industry is already looking toward 800G and 1.6T Ethernet.

The good news is that the architectural changes required for 400G (such as PAM4 adoption and high-density fiber layouts) lay the groundwork for these future speeds. 800G is essentially achieved either by bonding two 400G signals together or by running the same number of lanes at a higher baud rate. By establishing a 400G-ready fiber plant and cooling infrastructure now, you are effectively “paving the road” for the next decade of speed upgrades.

Some organizations currently building AI supercomputers are skipping 400G and looking directly at 800G. However, for the vast majority of enterprise backbones, 400G represents the “sweet spot” of price, performance, and availability in the current market.

Security Considerations for High-Speed Networks

With great speed comes the need for faster inspection. Upgrading your backbone to 400G means your firewalls and Intrusion Prevention Systems (IPS) must also be able to inspect traffic at these line rates.

Many legacy security appliances will become choke points if placed inline on a 400G link. Enterprises must budget for high-performance security gateways or utilize “service chaining” where only suspicious traffic is diverted for deep packet inspection, while trusted traffic flows at line speed. Additionally, network visibility tools (packet brokers and taps) must be upgraded to support 400G interfaces to ensure you don’t lose visibility into network traffic.
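The service-chaining idea can be sketched as a steering decision plus a capacity check. This is a deliberately simplified illustration: the trusted prefix, the 10% suspicious-traffic share, and the "fast-path"/"inspect" labels are hypothetical, and real deployments classify traffic on far richer criteria than source address.

```python
import ipaddress


def steer(src_ip: str, trusted_networks) -> str:
    """Send traffic from trusted prefixes on the fast path; divert the rest for inspection."""
    address = ipaddress.ip_address(src_ip)
    if any(address in network for network in trusted_networks):
        return "fast-path"
    return "inspect"


def diverted_gbps(link_gbps: float, suspicious_fraction: float) -> float:
    """Traffic volume the inspection tier must absorb."""
    return link_gbps * suspicious_fraction


# Hypothetical policy: one trusted internal prefix, 10% of traffic diverted.
TRUSTED = [ipaddress.ip_network("10.20.0.0/16")]
print(steer("10.20.5.9", TRUSTED))
print(steer("203.0.113.7", TRUSTED))
print(f"Inspection tier must handle {diverted_gbps(400, 0.10):.0f} Gb/s")
```

Sizing the inspection tier against the diverted volume, rather than the full line rate, is what makes this approach affordable on a 400G link.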

The transition to 400G network backbones

The transition to 400G network backbones is a critical infrastructure investment that enables the next generation of digital business. It resolves immediate bottlenecks caused by AI and cloud workloads, reduces the long-term cost of data transmission, and increases the energy efficiency of the data center.

While the initial investment is significant, the cost of inaction is higher. Sticking with 100G infrastructure in an AI-driven world will lead to application latency, poor user experience, and an inability to compete with faster, more agile rivals. By following a strategic, phased deployment plan and focusing on TCO rather than just CAPEX, IT leaders can turn their network from a utility into a competitive asset.

Key Takeaways for Decision Makers

- AI is the Driver: If you are deploying AI, you need 400G. The East-West traffic demands of model training necessitate it.

- TCO Wins: 400G lowers the cost per bit and power consumption per bit, offsetting higher upfront hardware costs.

- Compatibility Matters: Choose QSFP-DD for easier migration from 100G; choose OSFP if you are building a massive, future-ready AI cluster.

- Check Your Fiber: Ensure your fiber plant (Single Mode vs Multimode) is certified for PAM4 signaling requirements.

- Plan for Security: Ensure your security stack can keep up with 400 Gbps flows to avoid creating new bottlenecks.

Comprehensive 400G Market Trends and Analysis

To further substantiate the business case, we must look at the broader market trends influencing 400G availability and pricing. As of late 2025, the market has matured significantly, moving 400G from “bleeding edge” to “leading edge.”

Vendor Landscape and Silicon Diversity

The ecosystem for 400G is robust, with major competition driving down prices.

- Broadcom: Continues to dominate the merchant silicon market. Their Tomahawk and Trident chipsets are the engines behind many “white box” and branded switches.

- Cisco: Their Silicon One architecture has successfully unified routing and switching silicon, offering high-performance 400G options for both service provider and enterprise core roles.

- NVIDIA: With the acquisition of Mellanox, NVIDIA has taken a leading position in high-performance AI networking. Their Spectrum-X Ethernet platforms are specifically optimized for AI clouds, offering features like RDMA (Remote Direct Memory Access) over Converged Ethernet (RoCE), which is essential for GPU-to-GPU communication.

- Arista Networks: Continues to be a favorite in the hyperscale and high-frequency trading sectors due to their low-latency switches and deep buffer capabilities.

This healthy competition means that enterprise buyers have leverage. We are seeing aggressive pricing strategies for 400G ports as vendors fight to capture the “AI retrofit” market.

The Role of Optical Transceivers in Cost

A significant portion of the cost in a 400G upgrade lies not in the switch chassis, but in the optical transceivers. The market has seen a divergence in optic types:

- SR8 (Short Reach): Uses 8 pairs of multimode fibers. It is cheaper but requires massive amounts of cabling (MPO-16 connectors).

- DR4 (Datacenter Reach): Uses 4 parallel pairs of single-mode fibers (8 fibers in total, typically on an MPO-12 connector). It is becoming the standard for leaf-spine connections up to 500m.

- FR4 (Far Reach): Uses Wavelength Division Multiplexing (WDM) to send 400G over a single duplex single-mode fiber pair (LC connector), with a nominal reach of 2km. This is the most expensive of the three optics but offers the cheapest cabling cost because it reuses standard fiber pairs.

Strategic Tip: For data center retrofits, FR4 optics are often the best TCO choice. Even though the optic itself costs more than an SR8 module, the ability to reuse your existing LC fiber plant saves massive amounts of labor and material costs associated with re-cabling for MPO connectors.
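The retrofit trade-off can be expressed as a per-link comparison. Every cost figure below is a hypothetical placeholder chosen to illustrate the reasoning, not a price quote; the outcome depends entirely on your actual optic and cabling costs.

```python
def link_cost(optic_cost: float, new_cabling_cost: float, reuse_existing_plant: bool) -> float:
    """Total cost of one link: an optic at each end plus any new cabling."""
    cabling = 0 if reuse_existing_plant else new_cabling_cost
    return 2 * optic_cost + cabling


# Hypothetical figures (arbitrary currency units), for illustration only.
sr8_total = link_cost(optic_cost=400, new_cabling_cost=1500, reuse_existing_plant=False)
fr4_total = link_cost(optic_cost=900, new_cabling_cost=1500, reuse_existing_plant=True)
print(f"SR8 with new MPO cabling:  {sr8_total}")
print(f"FR4 on existing LC duplex: {fr4_total}")
```

With these placeholder values the more expensive FR4 optic still wins once re-cabling is included, which mirrors the tip above. If your plant already has suitable MPO trunks, the comparison can flip.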

Cloud-Native Networking and SONiC

The rise of 400G has coincided with the adoption of open networking operating systems like SONiC (Software for Open Networking in the Cloud). Many 400G switches are now “white box” capable, allowing enterprises to run open-source software on commodity hardware. This disaggregation of hardware and software can further reduce the TCO of a 400G rollout, decoupling the enterprise from expensive vendor lock-in and annual licensing fees.

Operationalizing 400G: Monitoring and Telemetry

Deploying the pipe is only half the battle; managing it is the other. 400G networks transmit data at such high speeds that traditional SNMP (Simple Network Management Protocol) polling is often too slow to detect micro-bursts that cause packet loss.

Streaming Telemetry

Modern 400G switches support streaming telemetry. Instead of the management server asking the switch “how are you?” every 5 minutes, the switch pushes real-time data about buffer utilization, packet drops, and interface statistics to a collector. This is vital for AI workloads, where even a few microseconds of congestion can stall a training job that costs thousands of dollars per hour.
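The following sketch illustrates why coarse polling hides microbursts. The buffer trace is synthetic (one sample per millisecond with a single three-sample burst) and the 90% threshold is an arbitrary example value; it does not use any real telemetry API.

```python
def polled_view(samples, interval):
    """Average utilization per polling interval, as coarse polling would report it."""
    averages = []
    for start in range(0, len(samples), interval):
        window = samples[start:start + interval]
        averages.append(sum(window) / len(window))
    return averages


def microbursts(samples, threshold):
    """Indices of samples at or above the threshold, as fine-grained telemetry would expose them."""
    return [index for index, value in enumerate(samples) if value >= threshold]


# Synthetic buffer utilization (%): mostly idle, with one short burst.
samples = [5] * 1000
samples[400:403] = [98, 99, 97]

print("Polled average:", polled_view(samples, 1000))
print("Burst samples: ", microbursts(samples, 90))
```

The polled average stays close to the idle level, while the per-sample view shows the buffer nearly full for three consecutive samples.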

When writing your Request for Proposal (RFP) for 400G equipment, ensure that In-Band Network Telemetry (INT) or similar deep-visibility features are a mandatory requirement. You cannot manage what you cannot measure, and at 400 Gbps, problems happen faster than human reaction times.

The Global Context: 400G in Emerging Markets

While North America and Europe lead in 400G adoption, we are seeing rapid uptake in the APAC and MEA regions. This global standardization simplifies supply chains for multinational enterprises. You can now standardize on a single 400G architecture for your data centers in New York, London, and Singapore, simplifying spare parts management and engineering training.

Final Thoughts: The Risk of Obsolescence

In the technology world, “good enough” is a rapidly depreciating asset. 100G networks are currently in the “good enough” phase for general office traffic but are woefully inadequate for the data-intensive applications that drive competitive advantage today.

Upgrading to 400G is not merely an infrastructure project; it is a business enablement strategy. It unlocks the full potential of your server and storage investments, enables the deployment of private AI clouds, and provides the agility required to pivot as market demands change. By starting your migration planning today, you ensure that your network remains the foundation of your business success, rather than the bottleneck that holds it back.

The time to architect for 400G is now. The vendors are ready, the standards are ratified, and the workloads are waiting.